Impact of Multi-Epoch On LLM Training#

As large language models (LLMs) scale up, researchers have begun to notice a growing imbalance between model size and the availability of high-quality training tokens. The relationship between model parameters and training data volume is crucial:

models require increasingly more tokens to achieve optimal performance.

Question

If we’re running out of new tokens, can we simply train on the same dataset multiple times — i.e., increase the number of epochs — to get better results?

Past studies have given mixed answers. Hoffmann et al. (2022) found that repeated training on the same tokens degraded model performance. Yet Taylor (Galactica, 2022) observed that up to four epochs improved performance.

To resolve these contradictions, researchers at the National University of Singapore (NUS) conducted a systematic study, analyzing how multi-epoch training affects LLM performance.

Paper:

1. Model Size Must Match the Number of Tokens

The number of tokens required for training scales almost linearly with the number of model parameters. If you want to train a model effectively, you must provide a dataset proportional to its parameter count.

2. Repeated Epochs Reduce Model Performance

When the total number of seen tokens is fixed but epochs are increased (i.e., reusing the same data more times), model performance decreases.

Larger models are especially prone to overfitting when trained repeatedly on the same dataset. Moreover, this degradation persists even when evaluating downstream tasks — performance suffers even if the total number of training tokens remains constant.

3. Larger Datasets Mitigate Multi-Epoch Performance Drop

When the dataset is large enough, repeated training has less negative impact. For instance, models trained repeatedly on larger token sets (e.g., 229M vs 227M tokens) showed milder overfitting trends.

4. Higher Data Quality Does Not Prevent Overfitting

It might seem that using cleaner, higher-quality data could counteract overfitting from repeated epochs — but that’s not the case.

Comparing C4 (a mixed-quality dataset) and Wikipedia (higher quality), researchers found that both suffered from performance degradation after repeated training. Data quality alone cannot offset the downsides of repetition.

5. The Role of Parameters and FLOPs

Two models with the same parameter count but different FLOPs (floating-point operations) can behave differently. Higher-FLOP architectures perform slightly better but still cannot eliminate the loss caused by repeated training.

6. Small Models Overfit Just Like Large Models

Interestingly, smaller models show similar overfitting trends as large ones. This means low-compute models can be used to predict large-scale model behaviors, reducing the cost of exploring training strategies.

7. Can Multiple Training Objectives Help?

Not all training objectives respond the same way to repeated training.

- Generative objectives (like next-token prediction) degrade faster with multiple epochs.

- Discriminative objectives (like masked-language modeling) are more resistant.

Models such as UL2, which combine both types, tend to be less suitable for high-epoch training.

8. Dropout: The Overlooked Regularization for LLMs

Despite being a classic technique to prevent overfitting, dropout is rarely used in modern large models (e.g., GPT-3, PaLM, LLaMA) — mainly due to computational overhead.

Yet, dropout can significantly reduce the negative effects of multi-epoch training. The Galactica model, which successfully used 4 epochs, benefited largely from dropout regularization.

9. Gradual Dropout Works Best

Instead of applying dropout throughout the entire training process, a progressive strategy — introducing dropout in later epochs — was found to be effective and more efficient.

10. Dropout Has Different Effects on Different Model Sizes

While dropout helps smaller models, its impact on large models (>10B parameters) becomes less effective. Large models remain vulnerable to overfitting despite dropout.

11. Using MoE Models to Calibrate Dense Models

Although not directly related to epochs, researchers found that Mixture-of-Experts (MoE) architectures can serve as proxies for dense models during hyperparameter tuning. Because MoE models exhibit similar training dynamics, they can be used to estimate optimal hyperparameters before committing full compute resources.

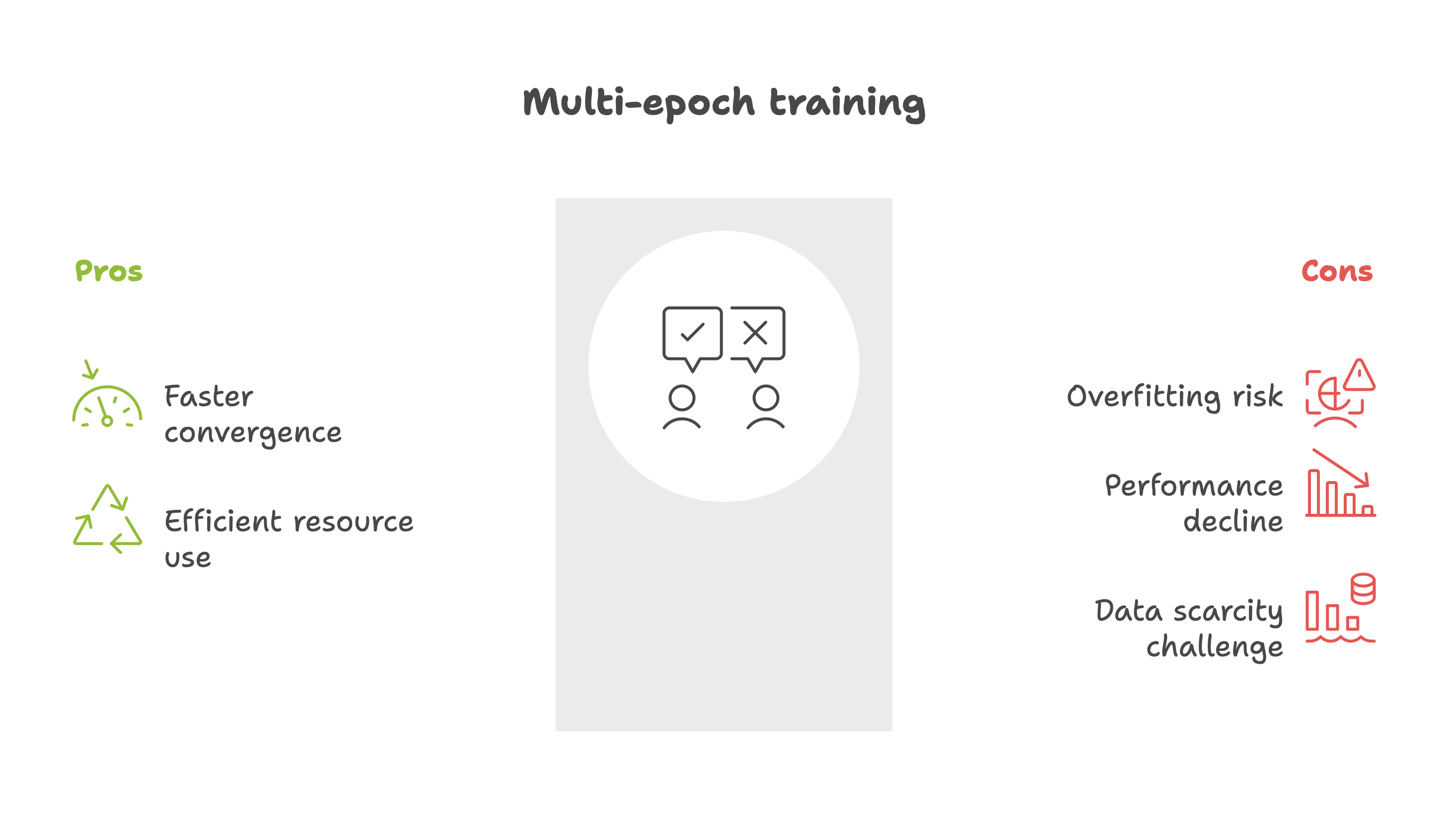

Summary: What Multi-Epoch Training Means for LLMs#

Repeated training on the same dataset hurts performance, both in pretraining and in downstream evaluations. While larger and higher-quality datasets alleviate this somewhat, they don’t eliminate the problem.

Given the limited availability of new data, future LLM development will likely face this token scarcity challenge. Regularization techniques — especially dropout — can help, though they slow training.

In short:

Multi-epoch training on repeated data accelerates overfitting, and regularization is the key to mitigating (but not solving) it.