Writing Code Is No Longer Enough#

Over the next 12 to 24 months, the differentiator among engineers will shift from mastery of programming languages like Rust, Go, or Python, or the volume of code produced, to the ability to effectively orchestrate and manage AI-driven engineering teams.

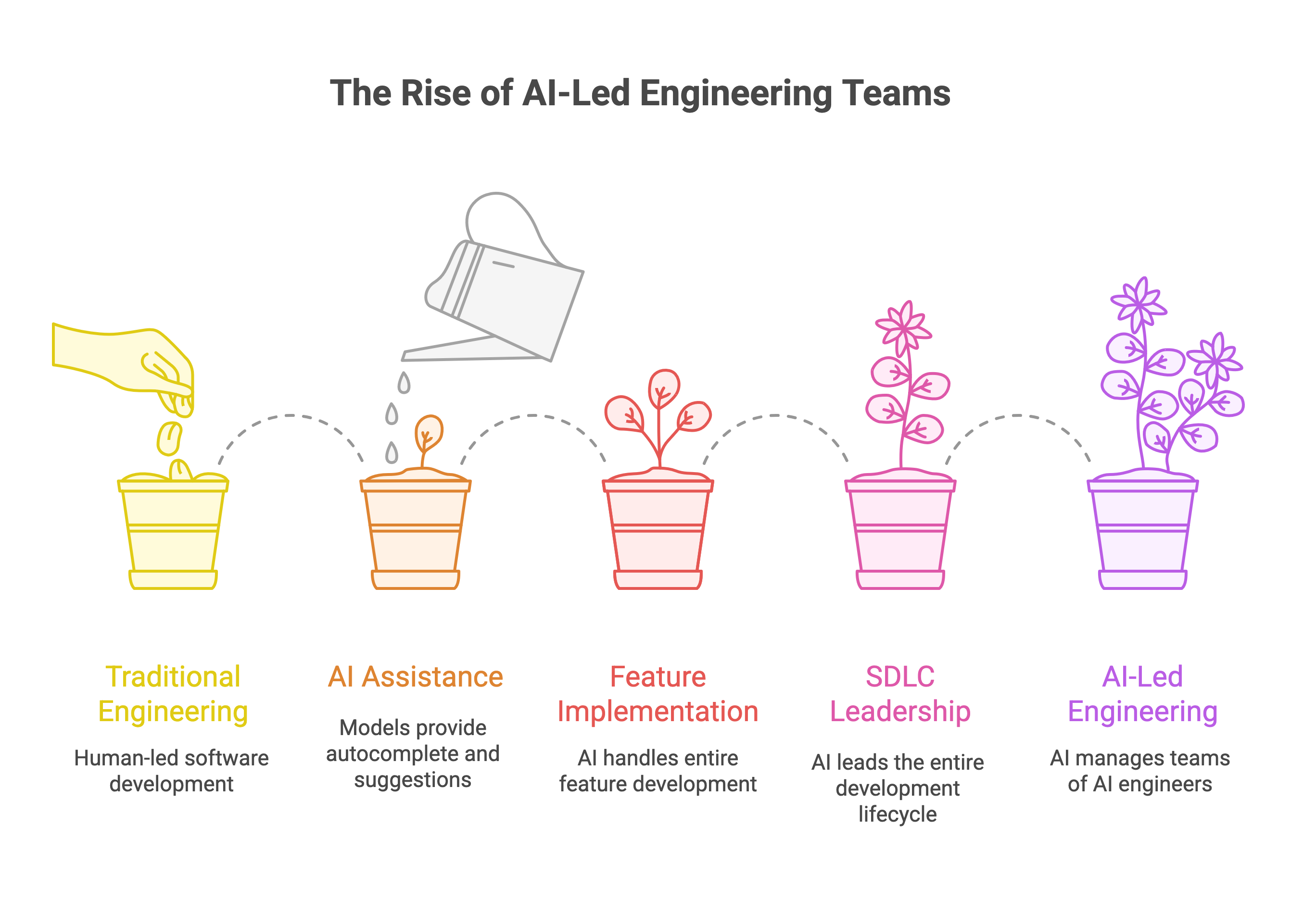

Cutting-edge models now demonstrate sustained reasoning capabilities exceeding two hours, with approximately 50% reliability—doubling every seven months in their capacity for continuous operation. Just a few years prior, these models were confined to basic autocomplete functions. Today, they are capable of independently executing entire development workflows, including coding, testing, documentation, and deployment adjustments.

This represents a transformative paradigm shift:

The entire Software Development Life Cycle (SDLC) is increasingly becoming an AI-empowered domain, redefining how software solutions are conceived and delivered.

1. From Autocomplete to "First-Line Engineer"#

Many teams still view AI coding tools in a limited way, focusing on:

- Generating function skeletons

- Writing a few SQL statements

- Drafting scripts

However, with long-context reasoning, the approach changes dramatically. The AI model no longer assists with isolated tokens; instead, it:

- Reads specifications, analyzes codebases, examines logs, and executes tests

- Generates implementation plans and breaks down tasks

- Produces changesets that are ready for diffs, complete with pull request descriptions and documentation

If you are still using these tools as merely a "smarter autocomplete," you are not fully leveraging what is essentially a junior engineer capable of independent execution.

Moreover, these models are not standalone. They can integrate with:

- Integrated Development Environments (IDEs)

- Command-Line Interfaces (CLIs)

- Issue trackers

- Logging and monitoring systems

- Continuous Integration/Continuous Deployment (CI/CD) pipelines

These AI agents won't just reside within your code editor; they will become part of your entire engineering stack.

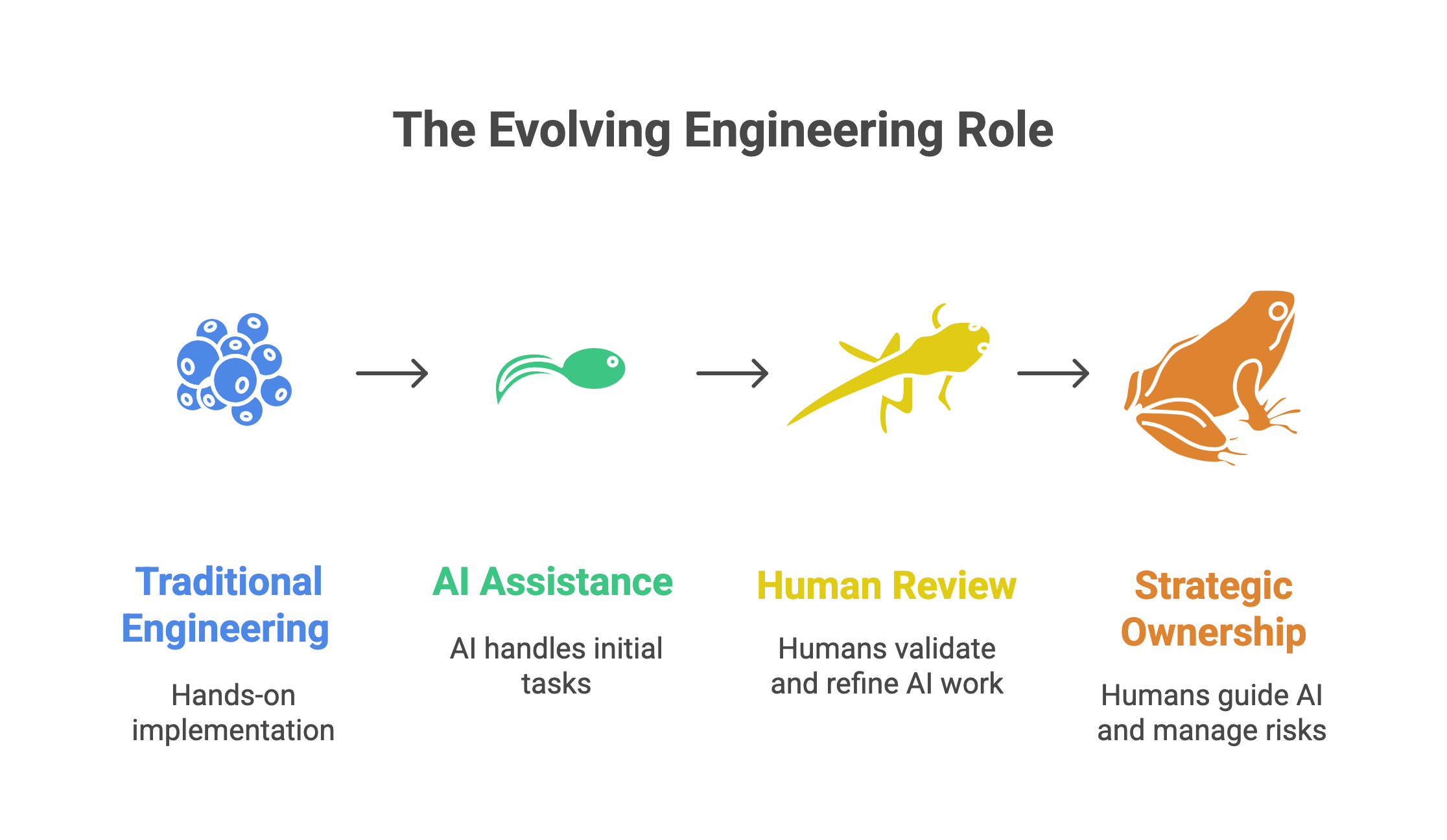

2. The Engineer’s New Role: Delegate, Review, Own#

OpenAI provides a helpful framework:

Delegate → Review → Own.

This serves as a blueprint for the future of engineering roles.

| Phase | Responsibility | Scope of Action |

|---|---|---|

| Delegate | AI Agent | Responsible for initial implementation tasks, including coding, testing, updating documentation, breaking down issues, and estimating dependencies. |

| Review | Human Engineer | Validates the architectural integrity, performs sanity checks on major migrations, assesses hidden risks, and verifies boundary correctness. |

| Own | Human Engineer | Holds ultimate responsibility for strategic direction, prioritization, risk mitigation, and long-term system maintainability. |

Consider AI agents as fast, high-output junior engineers who may occasionally make mistakes. You aren’t being replaced; instead, you are being promoted to lead and guide them.

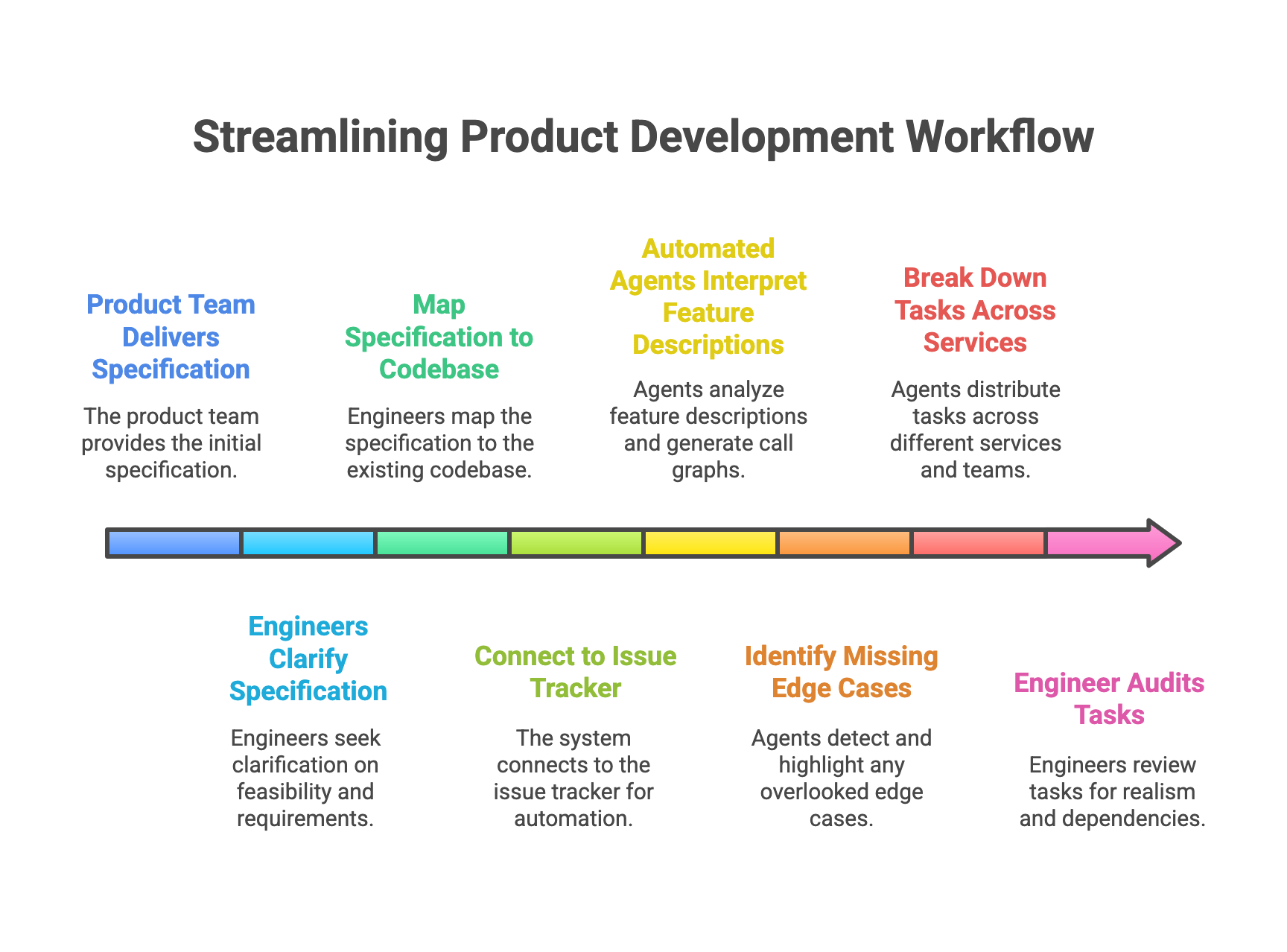

3. Planning: Streamlining Upstream and Downstream Workflows#

In the past, when the product team delivered a specification, engineers faced several cycles of clarification, which included questions like:

- Is this feasible?

- Which services need to be modified?

- How many sprints will this require?

- What legacy constraints are in place?

This process involved mapping a natural-language specification onto an existing codebase graph.

For models, this task is straightforward.

Once connected to your issue tracker, automated agents can:

- Interpret feature descriptions and generate call graphs through the repository.

- Identify missing edge cases.

- Automatically break down tasks across services and teams.

As a result, the engineer's role shifts from explanation to auditing:

- Is the effort estimate realistic?

- Are there unrecognized cross-team dependencies?

- Will this conflict with existing roadmap items?

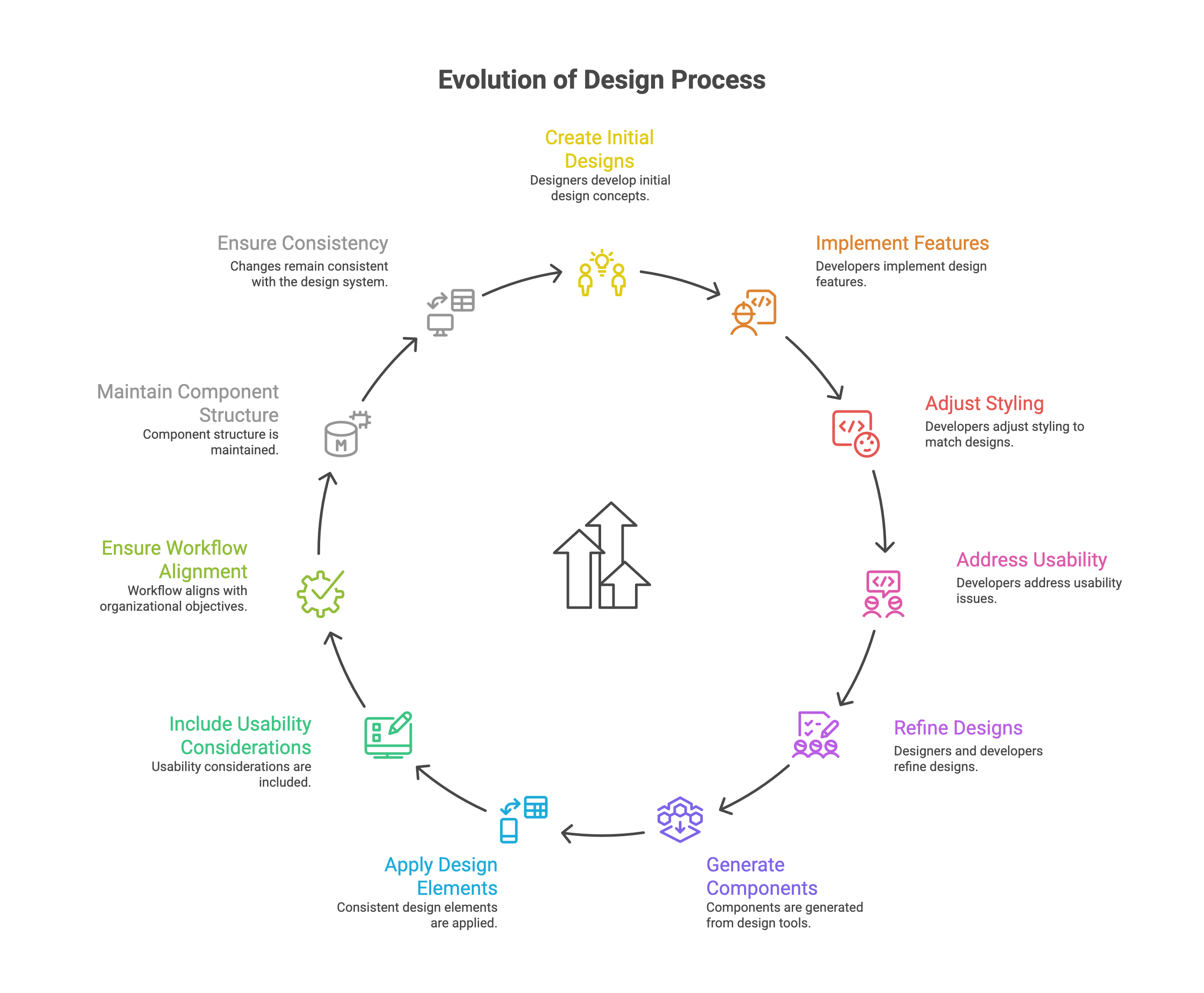

4. Design: From “Implementation Specialist” to “Design System Steward”#

The design process has historically been inefficient, with the introduction of advanced models that can analyze both design assets and code, we can significantly improve this workflow:

- Components can be generated directly from design tools.

- Consistent design elements, such as spacing and typography, are automatically applied.

- Usability considerations (such as accessibility and user flows) are included by default.

This method produces draft components and their underlying structure.

Now, your role has expanded beyond merely executing designs; it's about ensuring that:

- The overall workflow aligns with organizational objectives.

- The structure of the components is maintainable.

- Changes remain consistent with the established design system.

As a result, professionals evolve from being simple "implementers" to becoming stewards of user experience and system coherence.

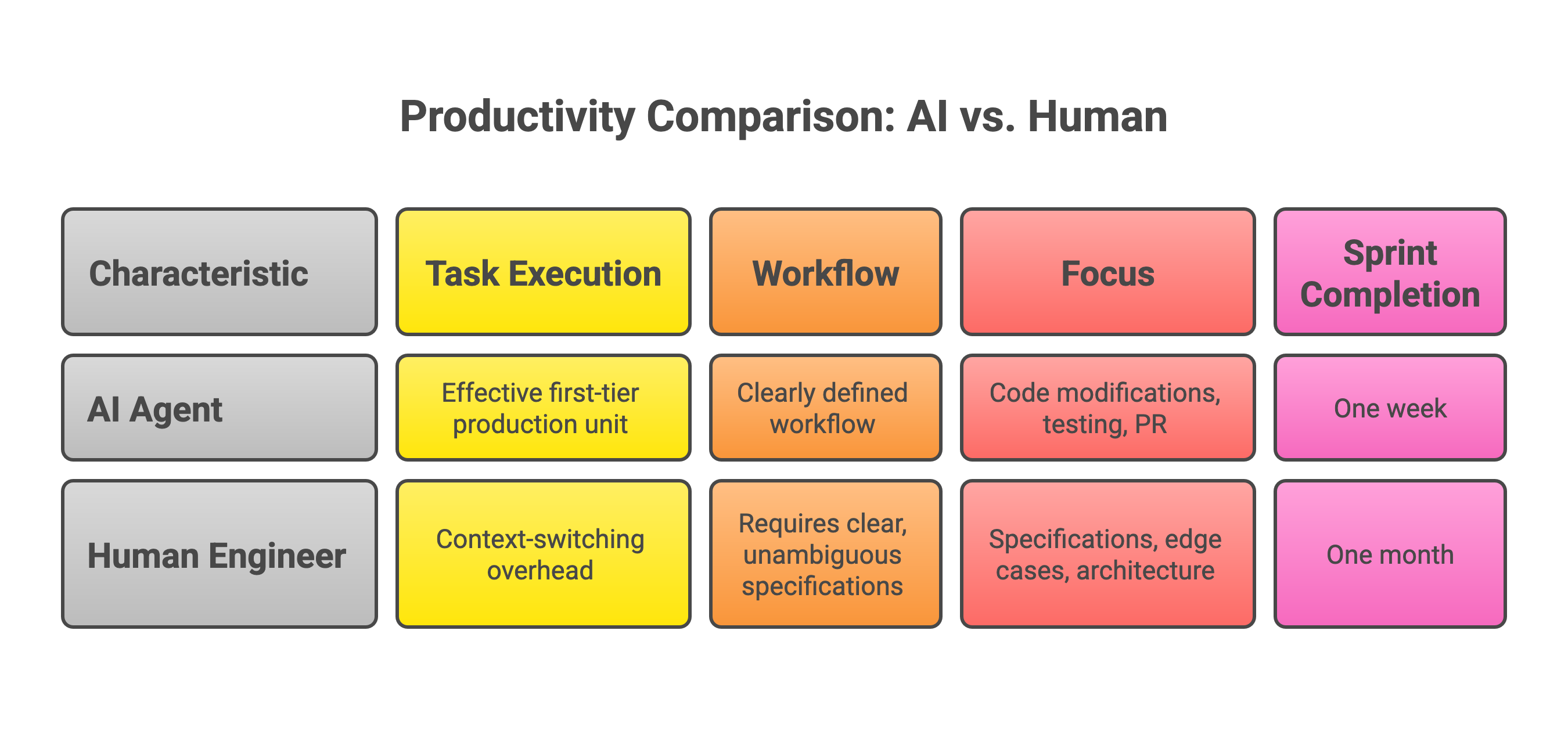

5. Build: Where the Most Significant Productivity Gap Will Arise#

The "Build" phase is where AI acts as the largest multiplier for productivity.

Implementing a new feature involves several tasks:

- Updating and migrating schemas

- Developing APIs and handlers

- Integrating the frontend and handling errors

- Conducting tests, gathering telemetry, and creating documentation

For humans, this process results in context-switching overhead.

For AI models, it translates to longer reasoning times and increased tool usage.

When you provide an AI agent with a clearly defined workflow, such as:

- Write PLAN.md

- Open a feature branch

- Apply all code modifications

- Run tests and linting until the code is clean

- Produce a complete pull request (PR) description

The agent can operate as an effective first-tier production unit.

Human engineers should concentrate on the following:

- Writing specifications that are clear and unambiguous for machines

- Encoding domain-specific edge cases into tests and constraints

- Reviewing architecture, boundaries, and long-term implications

A team that utilizes AI agents can complete a sprint's work in one week, whereas a traditional manual team may take an entire month. This productivity gap cannot be closed by simply working overtime.

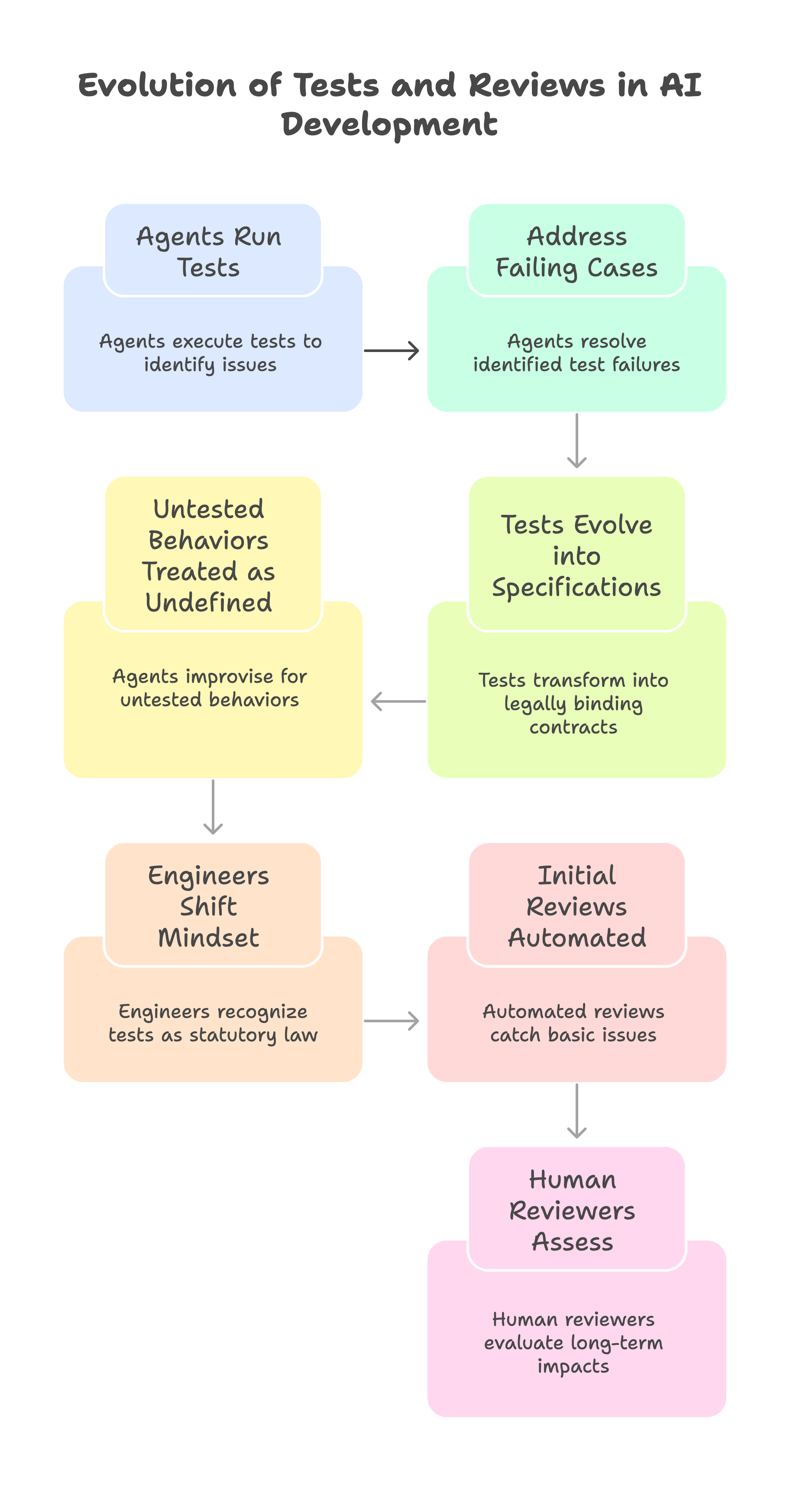

6. Test & Review: Tests Become “Formal Specifications”#

When agents run tests and address failing cases, tests evolve from being mere safety nets into legally binding contracts.

If a behavior is not tested, the agent treats it as undefined and will improvise freely.

Engineers must shift their mindset from viewing testing as tedious to understanding that:

“Tests are statutory law; AI is the executor.”

Similarly, the review process changes:

Initial reviews are automated to catch race conditions, inconsistencies, and obvious bugs.

Human reviewers assess for:

- Future technical debt

- Domain boundary violations

- Long-term architectural impact

Your value lies not in providing comments, but in safeguarding the system's trajectory over the next three years.

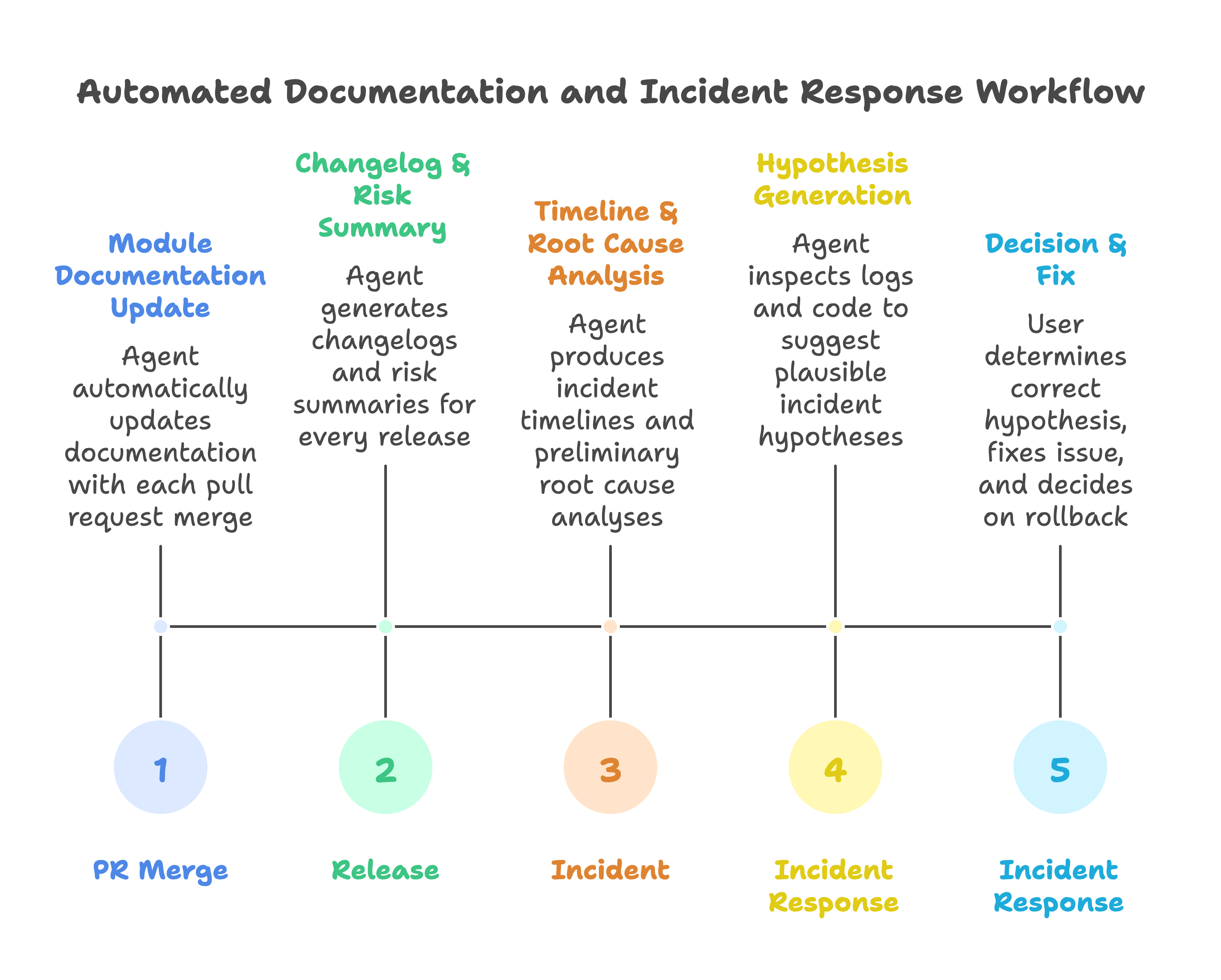

7. Documentation & Operations: Knowledge Becomes an Automated By-Product#

Documentation often fails for several reasons: - There is a lack of time to create and maintain it. - It tends to become outdated quickly. - While postmortem analyses are lengthy, they are rarely revisited.

To address these issues, agents can integrate documentation directly into the workflow by: - Automatically updating module documentation with each pull request (PR) merge. - Generating changelogs and risk summaries for every release. - Producing incident timelines and preliminary root cause analyses.

The question is no longer, “Who writes the documentation?” Instead, we should ask:

“Have we designed templates and standards so that AI-generated knowledge is genuinely useful?”

Incident response will also evolve dramatically. For example, you might ask:

“Can you investigate the spike in the /api/checkout error rate over the last 30 minutes?”

In response, the agent would inspect logs, recent changes, and code paths to produce several plausible hypotheses. You would then determine which hypothesis is correct, how to fix the issue, and whether to roll back changes.

While operations may not become simpler, the more mechanical tasks will be automated, thus allowing you to focus on judgment-driven decisions.

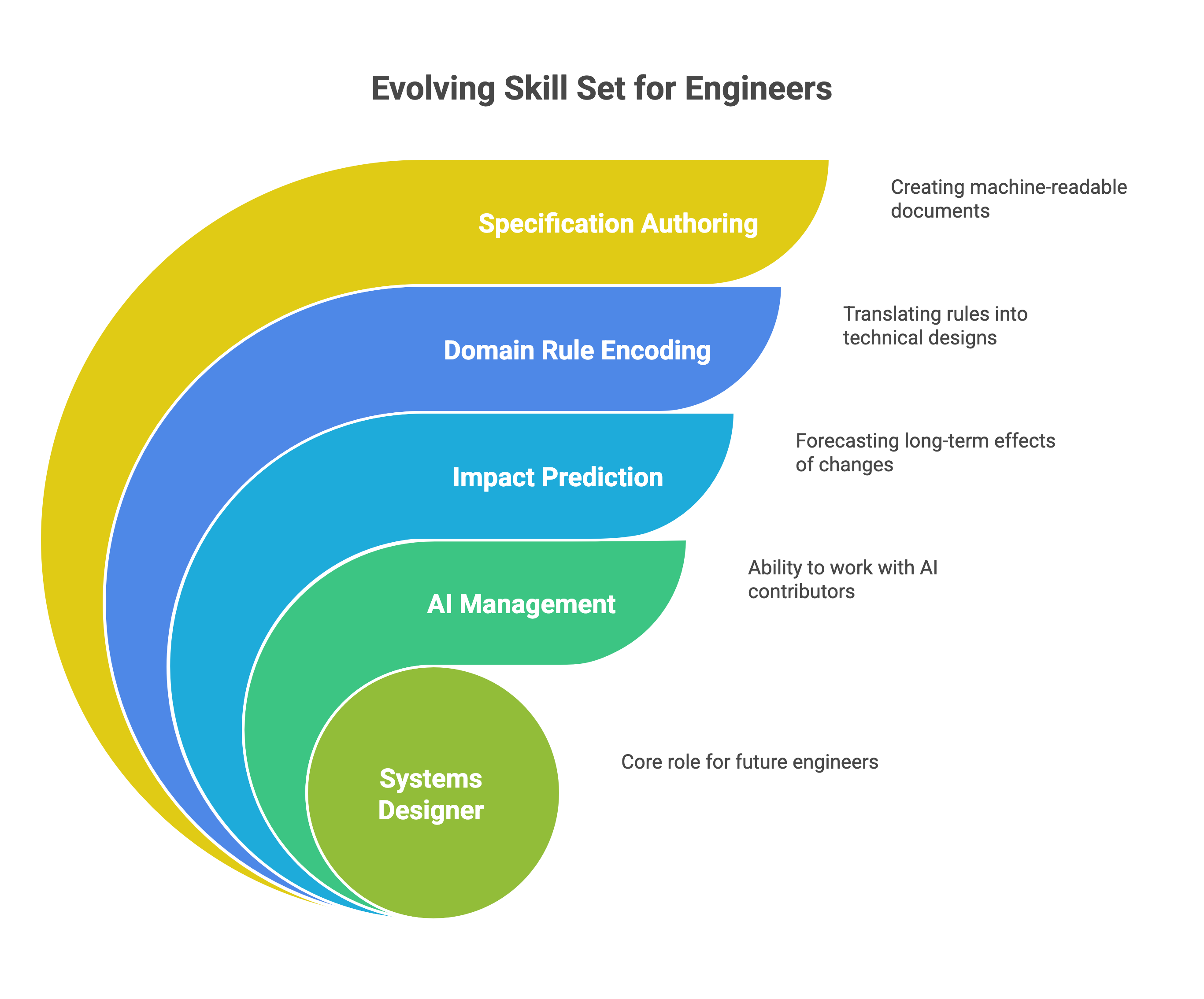

8. For Individual Engineers: Your Core Skill Set Must Evolve#

This article highlights an uncomfortable truth:

“Knowing how to write code” is no longer a distinguishing factor in the field of engineering.

What truly matters now is your ability to: - Author specifications that can be easily parsed by machines (including tests, examples, and constraints) - Encode domain rules into types, tests, and architectural designs - Predict the long-term impact of changes you implement - Manage a team of efficient yet unpredictable AI contributors

AI does not make engineers obsolete. However, engineers who refuse to adapt will risk stagnation.

You are being pushed to transition from a "coder" role to that of a systems designer.

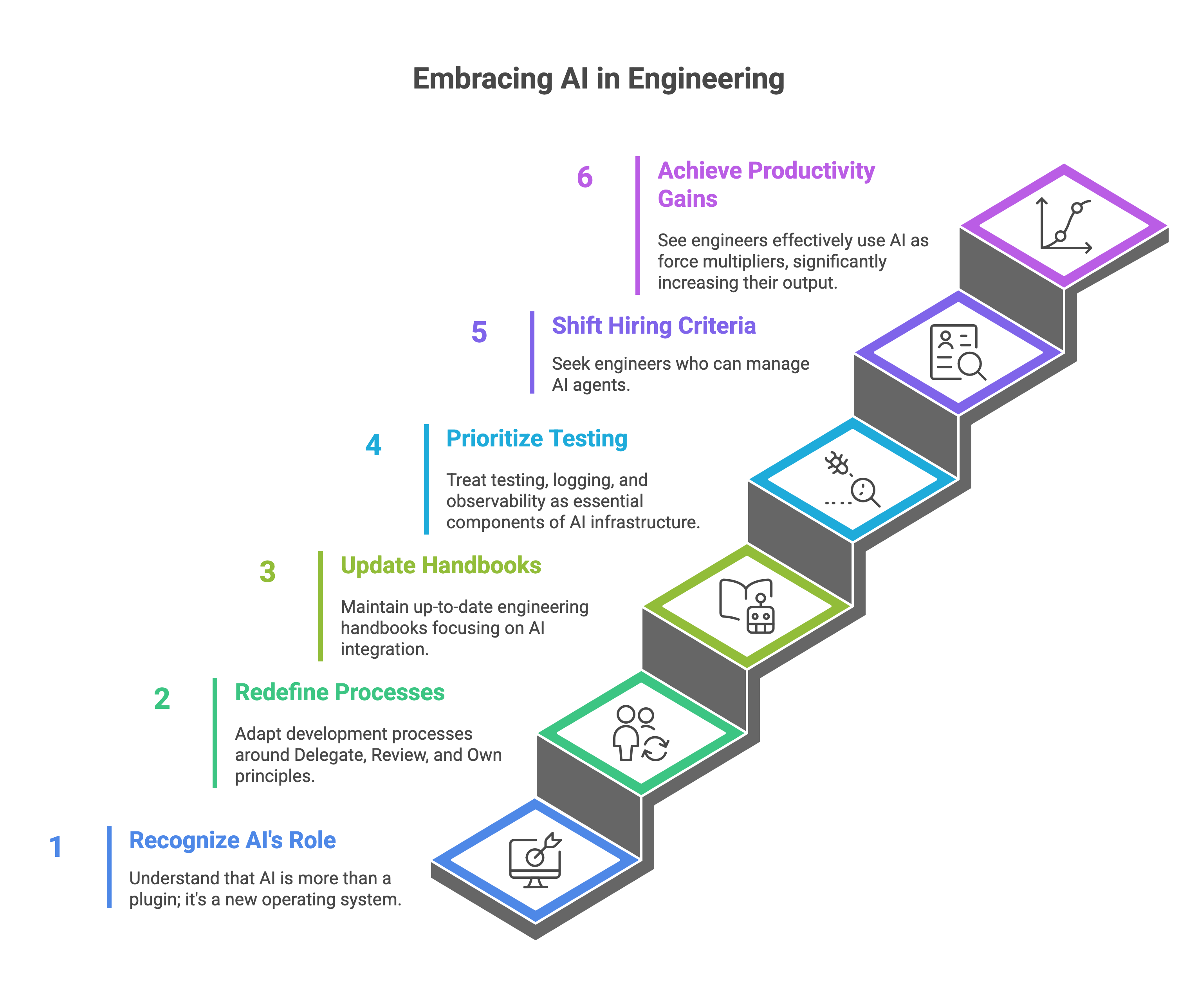

9. For Engineering Leaders: Treating AI as a Plugin Will Leave You Behind#

The key message is clear:

AI is not just a plugin; it’s a new operating system for engineering.

If you limit your approach to just:

- Installing an IDE plugin

- Encouraging casual usage

You will see a divide:

- Some engineers will effectively use AI as force multipliers, significantly increasing their output.

- Others will stick to manual processes, resulting in slower alignment and implementation cycles.

The repercussions are significant:

- Hiring criteria will shift towards seeking “engineers who can manage AI agents.”

- Productivity gaps will widen, potentially leading to differences in output stretching over multiple quarters.

To adapt, leaders must:

- Redefine development processes around the principles of Delegate, Review, and Own.

- Maintain up-to-date engineering handbooks, such as AGENTS.md, that focus on AI integration.

- Treat testing, logging, and observability as essential components of AI infrastructure, not just optional enhancements.

10. Conclusion: Drive Innovation with AI or Play Catch-Up: Shaping the Future of Software Engineering#

This shift isn’t merely an incremental upgrade in tools; it represents a fundamental overhaul of the traditional engineering workflow into an AI-native model.